Debate accelerates on universe’s expansion speed

A puzzling mismatch is plaguing two methods for measuring how fast the universe is expanding. When the discrepancy arose a few years ago, scientists suspected it would fade away, a symptom of measurement errors. But the latest, more precise measurements of the expansion rate — a number known as the Hubble constant — have only deepened the mystery.

“There’s nothing obvious in the measurements or analyses that have been done that can easily explain this away, which is why I think we are paying attention,” says theoretical physicist Marc Kamionkowski of Johns Hopkins University.

If the mismatch persists, it could reveal the existence of stealthy new subatomic particles or illuminate details of the mysterious dark energy that pushes the universe to expand faster and faster.

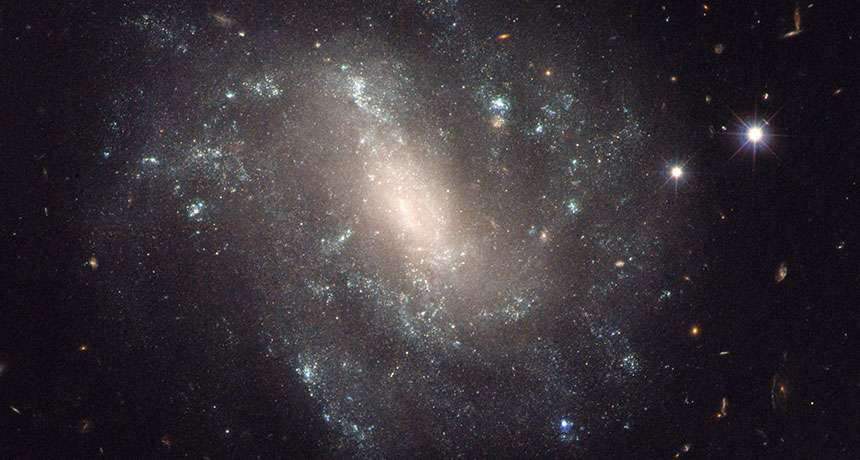

Measurements based on observations of supernovas, massive stellar explosions, indicate that distantly separated galaxies are spreading apart at 73 kilometers per second for each megaparsec (about 3.3 million light-years) of distance between them. Scientists used data from NASA’s Hubble Space Telescope to make their estimate, presented in a paper to be published in the Astrophysical Journal and available online at arXiv.org. The analysis pins down the Hubble constant with an experimental uncertainty of just 2.4 percent, making it more precise than previous estimates using the supernova method.

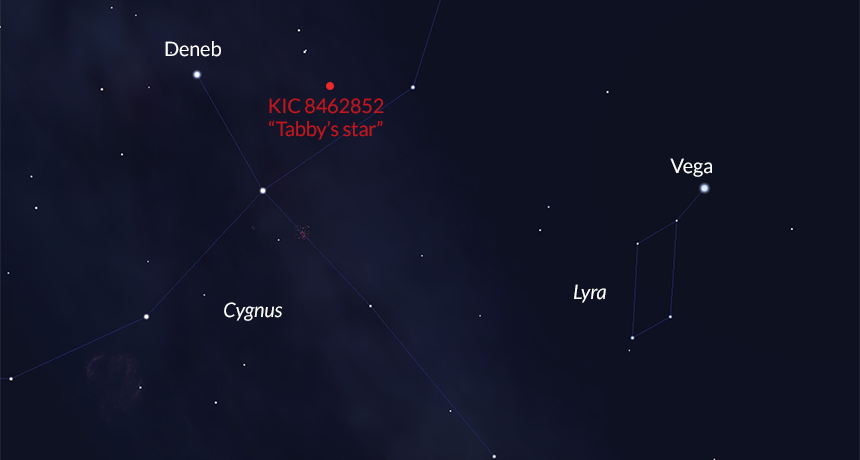

But another set of measurements, made by the European Space Agency’s Planck satellite, puts the figure about 9 percent lower than the supernova measurements, at 67 km/s per megaparsec with an experimental error of less than 1 percent. That puts the two measurements in conflict. Planck’s result, reported in a paper published online May 10 at arXiv.org, is based on measurements of the cosmic microwave background radiation, ancient light that originated just 380,000 years after the Big Bang.
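The size of the conflict can be checked with a little arithmetic. The sketch below uses the rounded figures quoted above (73 and 67 km/s per megaparsec) and rests on two assumptions that do not come from the papers themselves: each quoted error is treated as an independent Gaussian one-sigma uncertainty, and Planck’s “less than 1 percent” is taken as exactly 1 percent. The function names are illustrative.

```python
import math

def percent_difference(high, low):
    """Return how far `low` sits below `high`, as a percentage of `high`."""
    return 100.0 * (high - low) / high

def tension_in_sigma(value_a, frac_err_a, value_b, frac_err_b):
    """Gap between two measurements in units of their combined uncertainty.

    Assumes independent Gaussian errors, which is a simplification.
    """
    sigma_a = value_a * frac_err_a  # absolute error on measurement A
    sigma_b = value_b * frac_err_b  # absolute error on measurement B
    return abs(value_a - value_b) / math.hypot(sigma_a, sigma_b)

# Rounded values from the text, in km/s per megaparsec.
H0_SUPERNOVA = 73.0
H0_PLANCK = 67.0

gap = percent_difference(H0_SUPERNOVA, H0_PLANCK)
# 2.4 percent error for supernovas; 1 percent ASSUMED for Planck.
tension = tension_in_sigma(H0_SUPERNOVA, 0.024, H0_PLANCK, 0.01)

print(f"Planck sits {gap:.1f} percent below the supernova value")
print(f"Tension: about {tension:.1f} sigma")
```

With these rounded inputs, the gap comes to a bit over 8 percent of the supernova value (or about 9 percent of the Planck value), and the two results land roughly three combined standard deviations apart under the stated assumptions.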

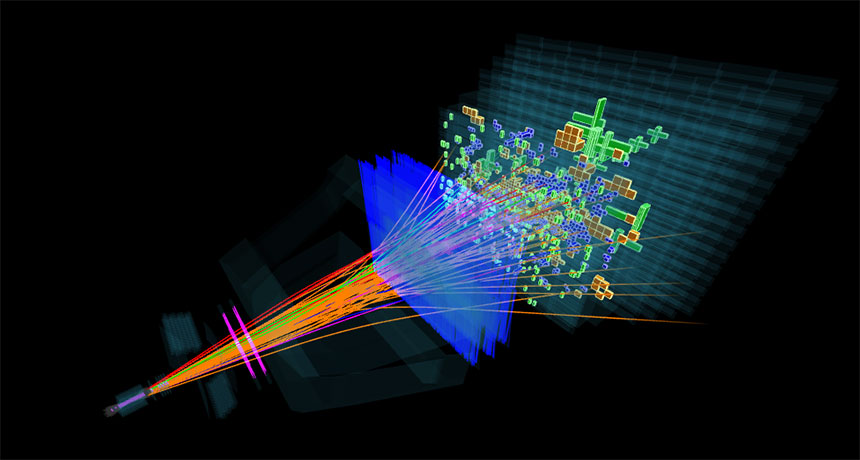

And now, another team has weighed in with a measurement of the Hubble constant. The Baryon Oscillation Spectroscopic Survey also reported that the universe is expanding at 67 km/s per megaparsec, with an error of 1.5 percent, in a paper posted online at arXiv.org on July 11. This puts BOSS in conflict with the supernova measurements as well. To make the measurement, BOSS scientists studied patterns in the clustering of 1.2 million galaxies. That clustering is the result of pressure waves in the early universe; analyzing the spacing of those imprints on the sky provides a measure of the universe’s expansion.

Although the conflict isn’t new (SN: 4/5/14, p. 18), the evidence that something is amiss has strengthened as scientists continue to refine their measurements.

The latest results are now precise enough that the discrepancy is unlikely to be a fluke. “It’s gone from looking like maybe just bad luck, to — no, this can’t be bad luck,” says the leader of the supernova measurement team, Adam Riess of Johns Hopkins. But the cause is still unknown, Riess says. “It’s kind of a mystery at this point.”

Since its birth from a cosmic speck in the Big Bang, the universe has been continually expanding. And that expansion is now accelerating, as galaxy clusters zip away from one another at an ever-increasing rate. The discovery of this acceleration in the 1990s led scientists to conclude that dark energy pervades the universe, pushing it to expand faster and faster.

As the universe expands, supernovas’ light is stretched, shifting its frequency. For objects of known distance, that frequency shift can be used to infer the Hubble constant. But measuring distances in the universe is complicated, requiring the construction of a “distance ladder,” which combines several methods that build on one another.
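In its simplest form, the relation described here is Hubble’s law: an object’s recession speed equals the Hubble constant times its distance. The following is a minimal sketch, assuming the low-redshift approximation in which speed is the speed of light times the redshift. The galaxy’s redshift and distance are made-up illustrative numbers, not data from any of the studies.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in km/s

def hubble_constant(redshift, distance_mpc):
    """Estimate H0 in km/s per megaparsec from one object.

    Uses v = c * z, which is only a reasonable approximation
    for small redshifts (z much less than 1).
    """
    velocity_km_s = SPEED_OF_LIGHT_KM_S * redshift
    return velocity_km_s / distance_mpc

# Hypothetical galaxy: redshift 0.0243 at a known distance of 100 megaparsecs.
h0 = hubble_constant(redshift=0.0243, distance_mpc=100.0)
print(f"H0 is about {h0:.1f} km/s per megaparsec")
```

The division itself is trivial. The difficulty lies in knowing the distance well enough, which is the job of the distance ladder.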

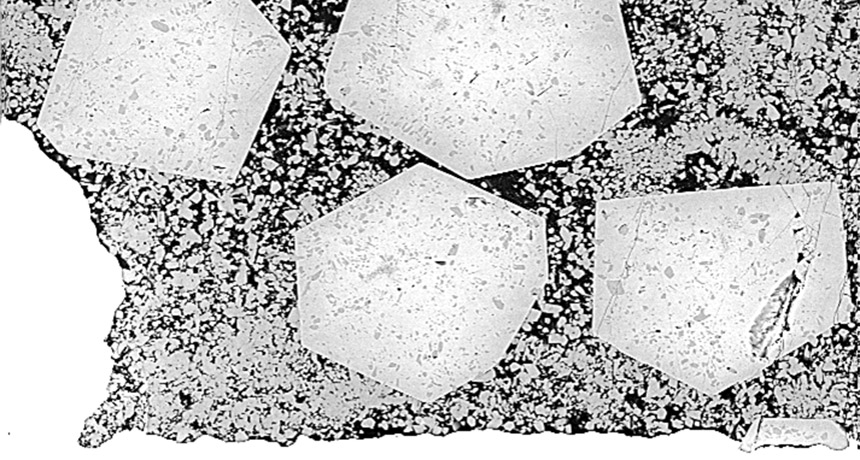

To create their distance ladder, Riess and colleagues combined geometrical distance measurements with “standard candles,” which are objects of known brightness. Since a candle that’s farther away is dimmer, if you know its absolute brightness, you can calculate its distance. For standard candles, the team used Cepheid variable stars, which pulsate at a rate that is correlated with their brightness, and type 1a supernovas, whose brightness properties are well understood.
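The dimmer-means-farther reasoning is usually written as the distance modulus, a logarithmic form of the inverse-square law: `m - M = 5 * log10(d / 10 parsecs)`, where `m` is the apparent magnitude and `M` is the absolute magnitude. The sketch below assumes no dimming by dust along the way. The magnitudes are hypothetical, with `M = -19.3` used as a commonly quoted approximate peak value for type 1a supernovas rather than a figure from the Riess analysis.

```python
def distance_parsecs(apparent_mag, absolute_mag):
    """Invert the distance modulus m - M = 5 * log10(d / 10 pc).

    Ignores extinction by dust, so this is an idealized estimate.
    """
    return 10 ** ((apparent_mag - absolute_mag + 5) / 5)

# Hypothetical supernova: observed at magnitude 15.7,
# with an ASSUMED absolute magnitude of -19.3.
d_mpc = distance_parsecs(15.7, -19.3) / 1e6  # parsecs to megaparsecs
print(f"Distance is about {d_mpc:.0f} megaparsecs")
```

Each additional 5 magnitudes of dimming corresponds to a factor of 10 in distance, so small errors in the assumed absolute brightness carry straight through to the distance and, in turn, to the Hubble constant.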

Scientists on the Planck team, on the other hand, analyzed the cosmic microwave background, using variations in its temperature and polarization to calculate how fast the universe was expanding shortly after the Big Bang. The scientists used that information to predict its current rate of expansion.

As for what might be causing the persistent discrepancy between the two methods, there are no easy answers, Kamionkowski says. “In terms of exotic physics explanations, we’ve been scratching our heads.”

A new type of particle could explain the mismatch. One possibility is an undiscovered variety of neutrino, which would affect the expansion rate in the early universe, says theoretical astrophysicist David Spergel of Princeton University. “But it’s hard to fit that to the other data we have.” Instead, Spergel favors another explanation: some currently unknown feature of dark energy. “We know so little about dark energy, that would be my guess on where the solution most likely is,” he says.

If dark energy is changing with time, pushing the universe to expand faster than predicted, that could explain the discrepancy. “We could be on our way to discovering something nontrivial about the dark energy — that it is an evolving energy field as opposed to just constant,” says cosmologist Kevork Abazajian of the University of California, Irvine.

A more likely explanation, some experts say, is that a subtle aspect of one of the measurements is not fully understood. “At this point, I wouldn’t say that you would point at either one and say that there are really obvious things wrong,” says astronomer Wendy Freedman of the University of Chicago. But, she says, if the Cepheid calibration doesn’t work as well as expected, that could slightly shift the measurement of the Hubble constant.

“In order to ascertain if there’s a problem, you need to do a completely independent test,” says Freedman. Her team is working on a measurement of the Hubble constant without Cepheids, instead using two other types of stars: RR Lyrae variable stars and red giant branch stars.

Another possibility, says Spergel, is that “there’s something missing in the Planck results.” Planck scientists measure the size of temperature fluctuations between points on the sky. Points separated by larger distances on the sky give a value of the Hubble constant in better agreement with the supernova results. And measurements from a previous cosmic microwave background experiment, WMAP, are also closer to the supernova measurements.

But, says George Efstathiou, an astrophysicist at the University of Cambridge and a Planck collaboration member, “I would say that the Planck results are rock solid.” If simple explanations in both analyses are excluded, astronomers may be forced to conclude that something important is missing in scientists’ understanding of the universe.

Compared with past disagreements over values of the Hubble constant, the new discrepancy is relatively minor. “Historically, people argued vehemently about whether the Hubble constant was 50 or 100, with the two camps not conceding an inch,” says theoretical physicist Katherine Freese of the University of Michigan in Ann Arbor. The current difference between the two measurements is “tiny by the standards of the old days.”

Cosmological measurements have only recently become precise enough for a few-percent discrepancy to be an issue. “That it’s so difficult to explain is actually an indication of how far we’ve come in cosmology,” Kamionkowski says. “Twenty-five years ago you would wave your hands and make something up.”